AI tools, most famously ChatGPT, have taken the world by storm. Chances are you’ve heard someone talking about AI. I mean, I’ve even heard my mum talking about it. But what are people actually using AI for? Personally, I’ve used it to generate wrestling promos about Captain Falcon and as a tool to tell me the difference between two contracts for work. A couple of largely harmless use cases that have helped me get some laughs out of friends and double check my work.

There are many more uses of ChatGPT, though, and one of those is “saving time” by using it to generate text for you. A simple prompt entered into the machine can easily give you back an entire paragraph about the topic you’ve chosen. That seems like an amazing way to save time and, if you’re a business, money. However, what you get back from these AI models isn’t always going to be the best quality work, nor is it guaranteed to be correct. So, here is how you can tell if something was written by AI.

Lack of personality and creativity

This is a little hard to explain, but whenever someone writes something, their voice comes through in their work. It’s all about the style of their writing. You normally pick it up from what you read or how you talk, and that translates into how you write. Every person has their own unique voice, and that’s one thing you’ll find lacking in anything written by AI.

With that voice in mind, it’s easy to tell when text has been AI-generated, because it feels very same-y. The sentences won’t have any variety. They’ll all be about the same length and follow the same structure. Look at the two paragraphs about Brisbane city that I generated below.

The paragraphs discuss two entirely different things, but they keep exactly the same cadence. Why? Because the AI is following the formula for how to write a paragraph.

People don’t typically write like that. Unless we’re writing an academic essay, we write how we talk. Our sentences are inconsistent. We jump into things head first. It makes things more interesting to read.

Originality

If you’re as chronically online as I am, you’ll have seen news about the WGA strike. Linked to that, you’ll also have seen people who only watch NCIS saying that the writers should get back to work, or that AI is going to replace them all. It takes literally one minute to see why that isn’t the case.

AI offers zero originality. That makes sense when you remember that AI is just a program that is fed information and then regurgitates it. Which works perfectly when you want to ask it (and this is a word-for-word prompt I have used in my free time) ‘Can you please write a wrestling promo in the style of Hollywood Rock about beating schoolkids who are playing Kirby in Super Smash Bros while playing Captain Falcon?’

This works because the machine has already seen all of The Rock’s promos, which follow a well-worn formula, and it just applies a layer of Super Smash Bros on top. That makes it good for a couple of laughs between my friends and me.

But you can’t expect the machine to bring any original ideas to the table, because it has no life experience. Because of this, it can’t really tell a compelling story. And it absolutely cannot tell a joke that you aren’t force-feeding it, like in the example above.

To make this incredibly clear, go to ChatGPT and ask it to write a scene from your favourite show. Give it a little prompt to work with and check out the output. There won’t be any jokes, or even a story beat. The AI will just stretch your exact prompt out into a script format.

And we expect this to replace the countless years of life experience that the writers of our favourite shows bring to the table?

Misinformation

In recent years, misinformation on the internet has been on the rise. And while much of it is malicious, what we’re talking about here isn’t. This isn’t the misleading-you-into-believing-a-certain-point-of-view kind of misinformation, although you should absolutely be careful about that and always double check the sources of anything you read online. This is the much simpler fact that sometimes AI just gets things wrong.

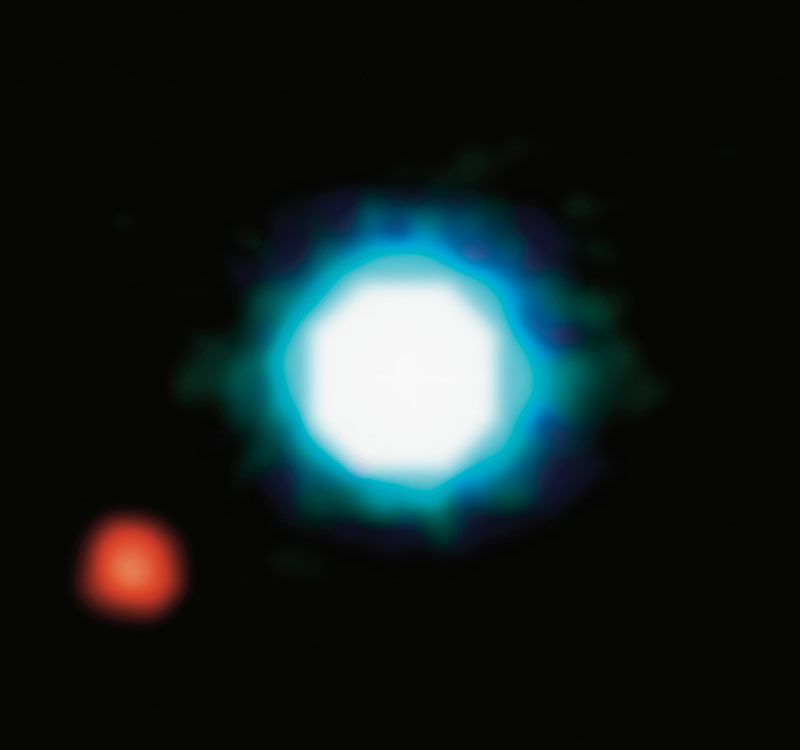

In Google’s first demo of its new AI tool Bard, there was an error. In a GIF Google published on Twitter, Bard was asked for cool facts about the James Webb Space Telescope, and it replied that the telescope “took the very first pictures of a planet outside our solar system”. However, the first image of a planet outside our solar system was taken in 2004 by the VLT (Very Large Telescope) in Chile, and you can find that image publicly on NASA’s website.

So, if you ever think you’re reading something written by AI, or even if you ask it a question yourself, double check the facts you’re given. Or, in broader terms, don’t trust everything you see on the internet.

[Editor’s Note]: Before you head off to get AI to write that essay that’s due tomorrow, why not check out Damo’s other sweet posts, or even go subscribe to the TTANZ company YouTube channel.